Logistic Regression

- Purpose

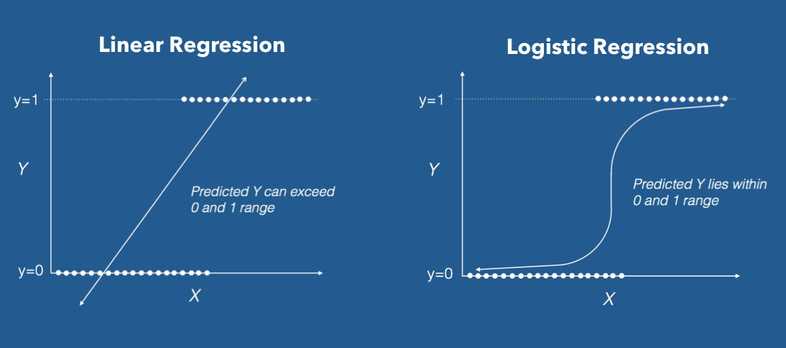

- Linear Regression vs Logistic Regression

- Examples of Logistic Regression

- Binary classification

- Building a logistic regression model in Python

- Odds and Log Odds

- Summary

- Model Building

- Summary - Multivariate Logistic Regression

- Summary - Steps of Building a Classification Model

- Takeaways

- References

Purpose

My notes on Logistic Regression

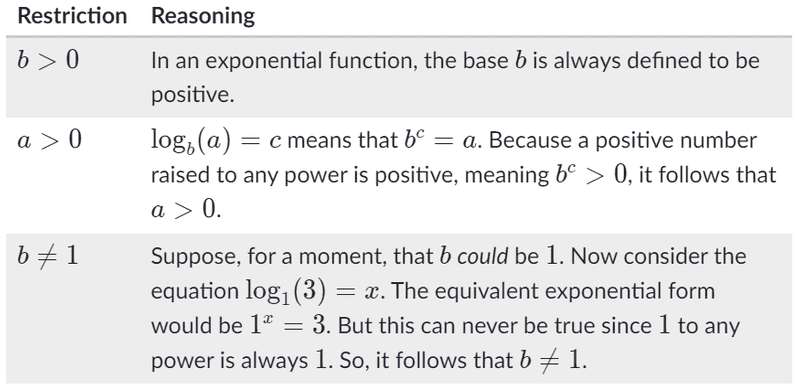

Exponential Functions

Laws of Exponents

Logarithm Property

A logarithm lets us rewrite an exponent. $\log_b a = c$ asks what power $b$ has to be raised to in order to get $a$, i.e. $b^c = a$,

where

- b is base

- c is exponent

- a is argument

Note

When rewriting an exponential equation in log form or a log equation in exponential form, it is helpful to remember that the base of the logarithm is the same as the base of the exponent.
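A small sketch of the log form and the exponential form sharing the same base. The helper name `log_base` is made up for illustration; it only wraps Python's `math.log`.

```python
import math

def log_base(a: float, b: float) -> float:
    """Return c such that b ** c == a, i.e. the logarithm of a to base b."""
    return math.log(a, b)

# Log form: log_2(8) = c
c = log_base(8, 2)

# Exponential form with the same base: 2 ** c should give back 8
back = 2 ** c

print(c, back)  # both are floating point, so expect values very close to 3 and 8
```
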

Read more about logarithms here and here

Solving Equations containing exponents

Q:

Properties of Logarithms

Product Property

$$\log_b(ac) = \log_b a + \log_b c$$

where a, b, c are positive numbers and $b \neq 1$

Quotient Property

$$\log_b\left(\frac{a}{c}\right) = \log_b a - \log_b c$$

where a, b, c are positive numbers and $b \neq 1$

Power Property

$$\log_b a^c = c \log_b a$$

where c is a real number, a and b are positive numbers and $b \neq 1$
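The three properties (the log of a product is a sum of logs, the log of a quotient is a difference of logs, and the log of a power pulls the exponent out front) can be checked numerically. This is only a sanity check with arbitrarily chosen numbers, not a proof.

```python
import math

# Arbitrary positive numbers chosen for illustration; b is the base (b != 1)
a, b, c = 8.0, 2.0, 32.0
power = -1.5  # any real exponent

def log_b(x: float) -> float:
    """Logarithm of x to the base b defined above."""
    return math.log(x, b)

product_ok = math.isclose(log_b(a * c), log_b(a) + log_b(c))
quotient_ok = math.isclose(log_b(a / c), log_b(a) - log_b(c))
power_ok = math.isclose(log_b(a ** power), power * log_b(a))

print(product_ok, quotient_ok, power_ok)
```
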

More Rules

Linear Regression vs Logistic Regression

| Linear Regression | Logistic Regression |

|---|---|

| numeric output | categorical output |

Examples of Logistic Regression

- A finance company wants to know whether a customer will default or not

- Predicting an email is spam or not

- Categorizing email into promotional, personal and official

Binary classification

- Two possible outputs


Examples:

- customer will default or not

- email spam or not

- a user is a robot or not

Sigmoid function

The sigmoid curve has all the properties you would want in a binary classification problem, which makes it a good fit for such models:

- extremely low values in the start

- extremely high values in the end

- intermediate values in the middle

Formula

$$P = \frac{1}{1 + e^{-(\beta_0 + \beta_1 X)}}$$
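A minimal sketch of the sigmoid, assuming the usual single-variable logistic form with an intercept `beta0` and a slope `beta1` (the default values here are arbitrary). It shows the three regions listed above: values near 0 at the start, near 1 at the end, and intermediate values in the middle.

```python
import math

def sigmoid(x: float, beta0: float = 0.0, beta1: float = 1.0) -> float:
    """Probability given by the logistic curve 1 / (1 + e^-(beta0 + beta1 * x))."""
    z = beta0 + beta1 * x
    return 1.0 / (1.0 + math.exp(-z))

low = sigmoid(-10)   # far left of the curve: very close to 0
mid = sigmoid(0)     # centre of the curve: exactly 0.5 with these betas
high = sigmoid(10)   # far right of the curve: very close to 1

print(low, mid, high)
```
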

Best fit Sigmoid Curve

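Likelihood function

The product, for i = 1 to N, of the probability that the curve assigns to each observed outcome is called the Likelihood function:

$$L(\beta_0, \beta_1) = \prod_{i=1}^{N} P_i^{\,y_i} (1 - P_i)^{1 - y_i}$$

The best fit sigmoid curve is the one whose betas maximise this product.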
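To make the likelihood concrete, here is a rough sketch on made-up toy data. Each point contributes its predicted probability if the event happened (y = 1) and one minus that probability otherwise. The crude grid search stands in for a real optimiser and is only meant to illustrate "varying the betas"; the data, the grid and the function names are assumptions for illustration.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def likelihood(xs, ys, beta0, beta1):
    """Product over all points of P_i if y_i == 1, else (1 - P_i)."""
    result = 1.0
    for x, y in zip(xs, ys):
        p = sigmoid(beta0 + beta1 * x)
        result *= p if y == 1 else (1.0 - p)
    return result

# Made-up toy data: larger x makes the event (1) more likely
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [0, 0, 1, 0, 1, 1]

flat = likelihood(xs, ys, beta0=0.0, beta1=0.0)      # every P_i is 0.5
better = likelihood(xs, ys, beta0=-3.5, beta1=1.0)   # curve that rises with x

# Crude grid search over the betas in steps of 0.5
grid = [(b0 / 2, b1 / 2) for b0 in range(-20, 21) for b1 in range(-10, 11)]
best = max(grid, key=lambda b: likelihood(xs, ys, b[0], b[1]))

print(flat, better, best)
```

In practice the log of the likelihood is maximised instead, because a product of many probabilities quickly becomes a very small number.
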

Building a logistic regression model in Python

We can use statsmodels to build a logistic regression model.

The coef column of the model summary gives us the values of $\beta_0$ and $\beta_1$.

Odds and Log Odds

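This equation:

$$P = \frac{1}{1 + e^{-(\beta_0 + \beta_1 X)}}$$

is difficult to interpret.

We can simplify it by using Odds, which is given as:

$$\frac{P}{1 - P} = e^{\beta_0 + \beta_1 X}$$

We interpret odds as the ratio of the probability of the event occurring to the probability of it not occurring:

$$\text{Odds} = \frac{P(\text{event})}{P(\text{no event})}$$

Moreover, with every unit increase in X, the odds are multiplied by a constant factor $e^{\beta_1}$, i.e. the increase in odds is multiplicative rather than additive.

The Log Odds can then be obtained by applying $\ln$ (i.e. natural log, or log with base e, or $\log_e$) to both sides:

$$\ln\left(\frac{P}{1 - P}\right) = \beta_0 + \beta_1 X$$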

As we can see the RHS is the equation of a straight line, hence Log Odds follows a linear relationship w.r.t X.
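A quick numerical check of both statements, using hypothetical coefficients `beta0` and `beta1` picked only for illustration: a one-unit step in X multiplies the odds by e raised to `beta1`, and adds exactly `beta1` to the log odds.

```python
import math

# Hypothetical coefficients, chosen only for illustration
beta0, beta1 = -3.5, 1.0

def probability(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-(beta0 + beta1 * x)))

def odds(x: float) -> float:
    p = probability(x)
    return p / (1.0 - p)

def log_odds(x: float) -> float:
    return math.log(odds(x))

# One-unit increase in X, from 3 to 4
odds_ratio = odds(4.0) / odds(3.0)           # multiplicative change in odds
log_odds_step = log_odds(4.0) - log_odds(3.0)  # additive change in log odds

print(odds_ratio, math.exp(beta1))
print(log_odds_step, beta1)
```
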

Summary

- Simple boundary decision approach is not good for classification problems

- Sigmoid function gives us a good approximation of the probability of one of the classes being selected based on the input

- The process where you vary the betas until you find the best fit curve for the probability of an event occurring, is called logistic regression.

- The sigmoid equation for the probability is complex and hard to interpret directly, hence we use Odds and Log Odds

- The Log Odds vs X graph is linear in nature as it follows the equation of a straight line

Multivariate Logistic Regression

Why checking Churn Rate is Important

The reason for having a balance is simple. Let’s do a simple thought experiment: if you had data with, say, 95% not-churn (0) and just 5% churn (1), then even if you predicted everything as 0, you would still get a model which is 95% accurate (though it is, of course, a bad model). This problem is called class imbalance and you'll learn to solve such cases later.
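The thought experiment can be reproduced in a few lines. The labels below are synthetic and simply mirror the 95% / 5% split described above.

```python
# Synthetic labels: 5 churners (1) and 95 non-churners (0)
y_true = [1] * 5 + [0] * 95

# A "model" that predicts not-churn for everyone
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
churners_caught = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)

print(accuracy)         # high accuracy...
print(churners_caught)  # ...yet not a single churner is identified
```
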

Why we only use transform on test set

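The 'fit_transform' command first learns the mean and standard deviation of each variable from the data (fit) and then rescales the data to have a mean of 0 and a standard deviation of 1 (transform), i.e. it scales all the variables using:

$$x_{scaled} = \frac{x - \mu}{\sigma}$$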

Now, once this is done, all the variables are transformed using this formula. Now, when you go ahead to the test set, you want the variables to not learn anything new. You want to use the old centralisation that you had when you used fit on the train dataset. And this is why you don't apply 'fit' on the test data, just the 'transform'.
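A toy sketch of the idea, written with the standard library only. `SimpleStandardScaler` is a hypothetical stand-in that mimics the fit / transform split; it is not the scikit-learn class. The point is that the mean and standard deviation are learned once on the training data and then reused unchanged on the test data.

```python
import statistics

class SimpleStandardScaler:
    """Toy stand-in for a standard scaler (hypothetical, for illustration only)."""

    def fit(self, values):
        # Learn the centring and scaling parameters from the given data
        self.mean_ = statistics.fmean(values)
        self.std_ = statistics.pstdev(values)
        return self

    def transform(self, values):
        # Apply the previously learned parameters; nothing new is learned here
        return [(v - self.mean_) / self.std_ for v in values]

    def fit_transform(self, values):
        return self.fit(values).transform(values)

train = [10.0, 20.0, 30.0, 40.0]   # made-up training values
test = [25.0, 50.0]                # made-up test values

scaler = SimpleStandardScaler()
train_scaled = scaler.fit_transform(train)  # fit on train, then scale train
test_scaled = scaler.transform(test)        # reuse the train mean and std

print(train_scaled)
print(test_scaled)
```

Calling `fit_transform` on the test values instead would recompute the mean and standard deviation from the test set, so train and test would end up on different scales.
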

Read more here.

Model Building

The steps for building a model are similar for Linear Regression and Logistic Regression

- Load and Prepare the Data

- Perform EDA on the Data to identify missing values and outliers

- Create dummy variables for Categorical Variables

- Identify any highly correlated values using heatmap and drop such values based on business needs

- Divide data in Training and Test Set

- Scale your training set using MinMaxScaler or StandardScaler (using fit_transform())

- Extract Xtrain and ytrain and build the model using statsmodels for statistical analysis (sm.GLM())

- If there are too many features and we need automated feature elimination via RFE, we will use sklearn module

- Once we have eliminated a certain number of features, we will use the information and select the columns which RFE has chosen to perform manual feature selection on the remaining set

- To perform manual feature selection, we will check the p-values and VIF score

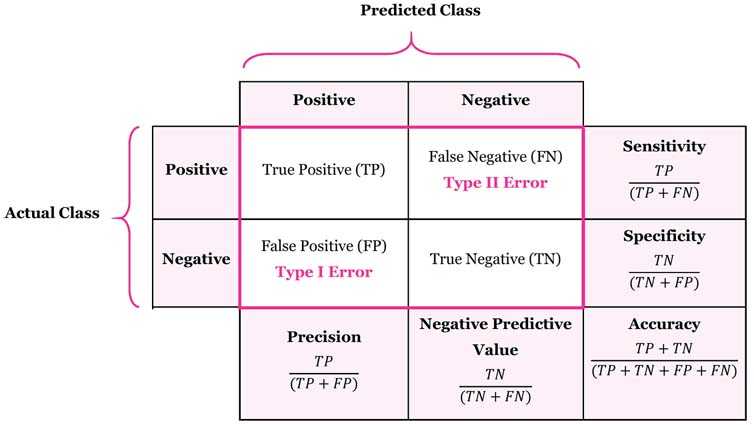

Confusion Matrix and Accuracy

| Actual | Predicted-No | Predicted-Yes |

|---|---|---|

| No | 1406 | 143 |

| Yes | 263 | 298 |

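Accuracy

$$\text{Accuracy} = \frac{\text{Correctly predicted labels}}{\text{Total number of labels}}$$

Ex: for the confusion matrix above,

$$\text{Accuracy} = \frac{1406 + 298}{1406 + 143 + 263 + 298} = \frac{1704}{2110} \approx 80.76\%$$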

Creating confusion matrix
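A minimal sketch of how a confusion matrix can be built by hand from actual and predicted labels. The layout (rows are actual, columns are predicted) follows the table above; the short label lists are made up for illustration. The last lines recompute the accuracy for the counts in the table above.

```python
def confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for binary labels 0/1 (rows: actual, columns: predicted)."""
    matrix = [[0, 0], [0, 0]]
    for actual, predicted in zip(y_true, y_pred):
        matrix[actual][predicted] += 1
    return matrix

# Made-up labels for illustration
y_true = [0, 0, 0, 1, 1, 1, 0, 1]
y_pred = [0, 1, 0, 1, 0, 1, 0, 1]

matrix = confusion_matrix(y_true, y_pred)
(tn, fp), (fn, tp) = matrix
accuracy = (tn + tp) / len(y_true)
print(matrix, accuracy)

# Accuracy for the counts in the table above
table_accuracy = (1406 + 298) / (1406 + 143 + 263 + 298)
print(round(table_accuracy, 4))
```
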

Interpreting the Model

Questions

If all variables are the same for two customers (A & B), except 'PaperLessBilling', which signifies whether the customer has opted for paperless billing (1) or not (0), and A has opted for paperless billing, which of them has the higher log odds?

- Log Odds is given by: $\ln\left(\frac{P}{1 - P}\right) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n$

- Hence, provided the coefficient of PaperLessBilling is positive, we can say that for customer A, Log Odds will be higher as $X_{\text{PaperLessBilling}}$ will be 1 for customer A, while it will be 0 for customer B.

What does Higher Log Odds imply?

- Higher the Log Odds, higher the chances of the event occurring. This is because, as the number increases, its log increases and vice versa.

Given previous history of customers, identify which customer will like the new show most

- Convert information from table to dictionaries

- Iterate over dictionaries

- Get the value of Log Odds by forming the required equation

Summary - Multivariate Logistic Regression

- Multivariate Logistic Regression is an extension of single variable logistic regression similar to Linear Regression (add more coefficients)

- It is important to check the class distribution of the data, i.e. what percentage of observations falls in each class (event happening vs event not happening), so that the model does not become biased

- Model building process is similar to Linear Regression

- Confusion matrix is used for finding out accuracy

Metrics

In classification, you almost always care more about one class than the other. On the other hand, the accuracy tells you model's performance on both classes combined - which is fine, but not the most important metric.

Consider another example - suppose you're building a logistic regression model for cancer patients. Based on certain features, you need to predict whether the patient has cancer or not. In this case, if you incorrectly predict many diseased patients as 'Not having cancer', it can be very risky. In such cases, it is better that instead of looking at the overall accuracy, you care about predicting the 1's (the diseased) correctly.

Similarly, if you're building a model to determine whether you should block (where blocking is a 1 and not blocking is a 0) a customer's transactions or not based on his past transaction behaviour in order to identify frauds, you'd care more about getting the 0's right. This is because you might not want to wrongly block a good customer's transactions as it might lead to a very bad customer experience.

Hence, it is very crucial that you consider the overall business problem you are trying to solve to decide the metric you want to maximise or minimise

Confusion Matrix

| Actual | Predicted-No | Predicted-Yes |

|---|---|---|

| No | True Negatives | False Positives |

| Yes | False Negatives | True Positives |

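Accuracy

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Sensitivity

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

- This is also called True Positive Rate

- This is also called signal

- We want to maximise this ratio

Specificity

$$\text{Specificity} = \frac{TN}{TN + FP}$$

False Positive Rate

$$\text{FPR} = \frac{FP}{FP + TN}$$

- This is same as (1 - Specificity)

- This is also called Noise

- We want to minimise this ratio

Negative Predictive Value

$$\text{NPV} = \frac{TN}{TN + FN}$$

Positive Predictive Value

$$\text{PPV} = \frac{TP}{TP + FP}$$

- This is also known as Precision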
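As a worked example, the metrics in this section can be computed for the counts in the first confusion matrix in these notes (1406 true negatives, 143 false positives, 263 false negatives, 298 true positives). The variable names are just illustrative.

```python
# Counts taken from the confusion matrix earlier in these notes
tn, fp, fn, tp = 1406, 143, 263, 298

accuracy = (tp + tn) / (tp + tn + fp + fn)
sensitivity = tp / (tp + fn)          # true positive rate, recall
specificity = tn / (tn + fp)
false_positive_rate = fp / (fp + tn)  # same as 1 - specificity
npv = tn / (tn + fn)                  # negative predictive value
ppv = tp / (tp + fp)                  # positive predictive value, precision

for name, value in [
    ("accuracy", accuracy),
    ("sensitivity", sensitivity),
    ("specificity", specificity),
    ("false positive rate", false_positive_rate),
    ("negative predictive value", npv),
    ("positive predictive value", ppv),
]:
    print(f"{name}: {value:.4f}")
```

Note how the model looks reasonable on accuracy while its sensitivity is only a little above one half, which is exactly why accuracy alone can mislead.
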

Receiver Operating Characteristic Curve - ROC Curve

- Plot between False Positive Rate (x-axis) and True Positive Rate (y-axis) is termed as the ROC curve.

- It shows the trade-off between sensitivity and specificity

- It will always start at (0,0) and end at (1,1)

- When the value of TPR (on the Y-axis) is increasing, the value of FPR (on the X-axis) also increases.

- Model should have Low FPR and High TPR

- A good ROC curve is the one which touches the upper-left corner of the graph. The AUC will be higher for such a curve.

- The closer the curve comes to the 45-degree diagonal of the ROC space, the less accurate the test. The AUC will be lower for such a curve.

- Higher the area under the curve (AUC) of an ROC curve, the better is your model

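- A random model has an AUC of 0.5 (the diagonal x=y); this is the practical minimum, since a model that scores below 0.5 can be improved by inverting its predictions

- The highest area of ROC curve is 1 (y=1 for all x in [0,1])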

- If there are spikes in the ROC curve, it means that the model is not stable

- As can be seen from the graph, better the classification (i.e. higher separation between classes), higher the AUC.
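The points above can be sketched by sweeping the cut-off over a set of predicted scores and recording (FPR, TPR) at each step, then taking the area with the trapezoidal rule. The labels and scores are made up, and `roc_points` and `auc` are hypothetical helper names, not library functions.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs obtained by lowering the cut-off one distinct score at a time."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # cut-off above every score: nothing is predicted as 1
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Made-up labels and predicted probabilities for illustration
y_true = [0, 0, 1, 0, 1, 1]
scores = [0.1, 0.3, 0.35, 0.6, 0.7, 0.9]

points = roc_points(y_true, scores)
area = auc(points)

print(points)  # starts at (0, 0) and ends at (1, 1)
print(area)    # between 0.5 (random) and 1 (perfect) for this data
```
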

Questions

Q: Given the ROC curves of 3 models A, B & C, which model is the best?

- The Area under Curve for C is the largest, hence it is the best model among the three.

Q: Let's say that you are building a telecom churn prediction model with the business objective that your company wants to implement an aggressive customer retention campaign to retain the 'high churn-risk' customers. This is because a competitor has launched extremely low-cost mobile plans, and you want to avoid churn as much as possible by providing incentives to the customers. Assume that budget is not a constraint. Which of Sensitivity, Specificity, and Accuracy will you choose to maximise?

Sensitivity: high sensitivity implies that your model will correctly identify almost all customers who are likely to churn. It will do that by over-estimating the churn likelihood, i.e. it will misclassify some non-churns as churns, but that is the trade-off we need to choose rather than the opposite case (in which case we may lose some low churn risk customers to the competition).

Precision

- probability that a predicted 'yes' is actually 'yes'

- formula is same as Positive Predictive Value

- The curve for precision can be jumpy because the denominator is not constant, it changes based on the threshold

Recall

- probability that 'yes' case is predicted as such

- formula is same as True Positive Rate / Sensitivity

- detection rate

Note: Increase Precision => Decrease Recall and vice-versa

Questions

Q: Calculate F1-Score given the confusion matrix below

| Actual / Predicted | Not Churn | Churn |

|---|---|---|

| Not Churn | 400 | 100 |

| Churn | 50 | 150 |

- F1-Score is the harmonic mean of precision and recall: $F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$
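A worked sketch for the confusion matrix in the question, treating Churn as the positive class (so TP = 150, FP = 100, FN = 50, TN = 400):

```python
# Counts from the confusion matrix in the question (Churn is the positive class)
tn, fp, fn, tp = 400, 100, 50, 150

precision = tp / (tp + fp)   # 150 / 250
recall = tp / (tp + fn)      # 150 / 200

# Harmonic mean of precision and recall
f1 = 2 * precision * recall / (precision + recall)

print(precision, recall, round(f1, 4))
```
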

Summary - Steps of Building a Classification Model

- Data cleaning and preparation

  - Combining dataframes

  - Handling categorical variables

    - Mapping categorical variables to integers

    - Dummy variable creation

  - Handling missing values

- Test-train split and scaling

- Model Building

  - Feature elimination based on correlations

  - Feature selection using RFE (Coarse Tuning)

  - Manual feature elimination (using p-values and VIFs)

- Model Evaluation

  - Accuracy

  - Sensitivity and Specificity

  - Optimal cut-off using ROC curve

  - Precision and Recall

- Predictions on the test set

Takeaways

- There is a trade off between TPR and FPR or Sensitivity and Specificity

- When building a Logistic Regression model, we will perform the steps of data cleaning, EDA, Feature Selection, train-test split, scaling. These are steps that are to be performed for any model.

- In Classification Models, we should look at statistics such as Specificity, Sensitivity, Precision and Recall. As per business understanding, we may have to prefer one metric over the other. Generally, the optimal cut off will be the one where the values for specificity, and sensitivity are similar.

- Precision-Recall and Specificity-Sensitivity are two views of the same problem and the choice depends on the business.

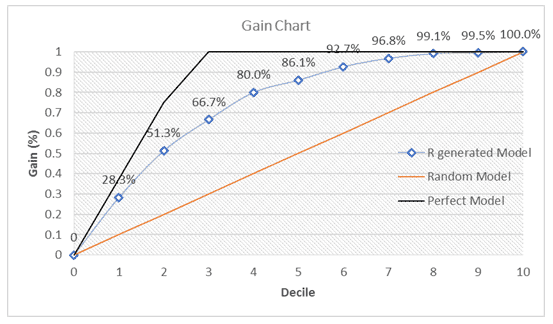

Measures of Discriminative Power

Sheet Demonstrating Gain, Lift and KS Statistic

- Note: Download this file as an excel to view the charts properly

Gain

- Create a model

- Sort by probability of churn

- Create buckets

- Find the cumulative percentage of churn captured up to each bucket

- Generate Gain Chart

Lift

- Create a Random Model

- Compare random model vs our model

- Lift tells you the factor by which your model is outperforming a random model, i.e. a model-less situation

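- Formula: $\text{Lift} = \frac{\text{Gain of our model}}{\text{Gain of a random model}}$, computed at each decile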

KS Statistic

- The KS statistic is an indicator of how well your model discriminates between the two classes.

- The KS statistic gives you an indicator of where you lie between the random and perfect model

- A good model will be such that:

  - KS Statistic $\ge 40\%$

  - it lies in the top deciles, i.e. 1st, 2nd, 3rd or 4th

- It is calculated as {% cumulative churn - % cumulative non-churn} for every decile; the KS statistic is the maximum of these differences
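The Gain, Lift and KS steps above can be sketched together on a tiny synthetic example. The 20 scores and churn labels below are invented for illustration (two customers per decile), and the random model is assumed to capture churners in proportion to the share of customers contacted, i.e. 10% per decile.

```python
# Synthetic predicted probabilities and churn labels, for illustration only
probabilities = [round(0.95 - 0.05 * i, 2) for i in range(20)]
churned = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]

# Sort customers by predicted probability of churn, highest first
ranked = sorted(zip(probabilities, churned), reverse=True)

# Cut the ranked list into 10 equal buckets (deciles)
n_buckets = 10
size = len(ranked) // n_buckets
total_churn = sum(churned)
total_non_churn = len(churned) - total_churn

rows = []  # one (decile, gain %, lift, ks %) tuple per decile
cum_churn = cum_non_churn = 0
for decile in range(1, n_buckets + 1):
    bucket = ranked[(decile - 1) * size : decile * size]
    churn_here = sum(label for _, label in bucket)
    cum_churn += churn_here
    cum_non_churn += len(bucket) - churn_here

    gain = 100 * cum_churn / total_churn       # % of all churners captured so far
    random_gain = 100 * decile / n_buckets     # what a random model would capture
    lift = gain / random_gain
    ks = gain - 100 * cum_non_churn / total_non_churn
    rows.append((decile, gain, lift, ks))

# KS statistic: the largest gap between cumulative churn % and cumulative non-churn %
ks_decile, ks_statistic = max(((d, ks) for d, _, _, ks in rows), key=lambda r: r[1])

for decile, gain, lift, ks in rows:
    print(f"decile {decile:2d}  gain {gain:6.2f}%  lift {lift:4.2f}  ks {ks:6.2f}%")
print(ks_decile, round(ks_statistic, 2))
```

By the last decile the gain reaches 100% and the lift falls back to 1, since by then every customer has been included regardless of the model.
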

References

- https://www.mathplanet.com/education/algebra-1/exponents-and-exponential-functions/properties-of-exponents

- https://www.mathplanet.com/education/algebra-2/exponential-and-logarithmic-functions/logarithm-property

- https://www.rapidtables.com/math/algebra/Logarithm.html

- https://www.khanacademy.org/math/algebra2/x2ec2f6f830c9fb89:logs/x2ec2f6f830c9fb89:log-intro/v/logarithms?modal=1

- https://datascience.stackexchange.com/questions/12321/whats-the-difference-between-fit-and-fit-transform-in-scikit-learn-models

- https://towardsdatascience.com/receiver-operating-characteristic-curves-demystified-in-python-bd531a4364d0

- https://en.wikipedia.org/wiki/Harmonic_mean